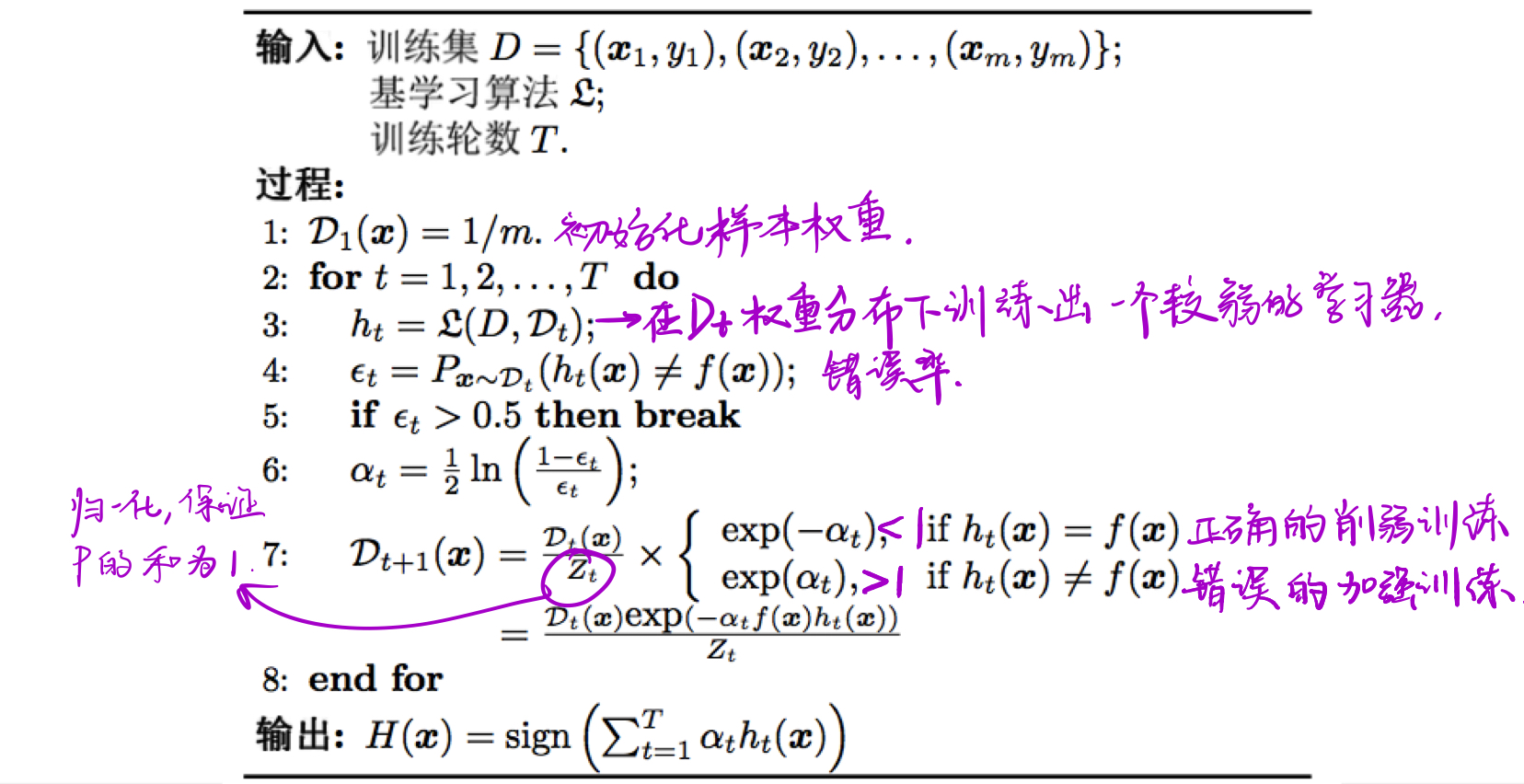

关于Adaboost Adaboost算法是针对二分类问题提出的集成学习算法,是boosting类算法最著名的代表。当一个学习器的学习的正确率仅比随机猜测的正确率略高,那么就称它是弱学习器,当一个学习期的学习的正确率很高,那么就称它是强学习器。而且发现弱学习器算法要容易得多,这样就需要将弱学习器提升为强学习器。Adaboost的做法是首先选择一个弱学习器,然后进行多轮的训练,但是每一轮训练过后,都要根据当前的错误率去调整训练样本的权重,让预测正确的样本权重降低,预测错误的样本权重增加,从而达到每次训练都是针对上一次预测结果较差的部分进行的,从而训练出一个较强的学习器。

实现 首先展示理论算法描述。

按照上述步骤开始用代码实现。

首先是初始化样本权重,初始他们的权重都是相等的,都是样本数分之一。代码如下所示。

1 2 n_train, n_test = len (X_train), len (X_test) W = np.ones(n_train) / n_train

然后在规定的训练轮数下,在相应的样本权重下对弱分类器进行训练。

1 Weak_clf.fit(X_train, Y_train, sample_weight=W)

然后在测试集进行预测,并且计算出不正确的样本数。

1 2 3 4 miss = [int (x) for x in (pred_train_i != Y_train)] miss_w = np.dot(W, miss)

下面就是根据预测的结果,对预测正确的样本权重进行削弱,对预测错误的样本权重进行加强,从而对样本对权重进行更新,用于下一次学习器的训练,公式代码如下所示。

1 2 3 4 5 6 7 alpha = 0.5 * np.log(float (1 - miss_w) / float (miss_w + 0.01 )) factor = [x if x == 1 else -1 for x in miss] W = np.multiply(W, np.exp([float (x) * alpha for x in factor])) W = W / sum (W)

最终输出的H(x)要对每个也测结果乘alhpa然后加入到结果的列表中。

1 2 3 4 pred_train_i = [1 if x == 1 else -1 for x in pred_train_i] pred_test_i = [1 if x == 1 else -1 for x in pred_test_i] pred_test = pred_test + np.multiply(alpha, pred_test_i)

最后对于列表中大于0 的值认为它预测为标签值1,小于0的值认为它预测为比标签值0。

1 2 3 pred_test = (pred_test > 0 ) * 1 return pred_test

从而完成了Adaboost的一次训练过程。

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 weak_clf = DecisionTreeClassifier(criterion='entropy' , max_depth=2 ) acc = [] pre = [] rec = [] f1 = [] Data = data.copy() kf = KFold(n_splits=10 , shuffle=True , random_state=0 ) for train_index, test_index in tqdm(kf.split(Data)): train_data = Data[train_index] test_data = Data[test_index] x_train = train_data[:, :8 ] y_train = train_data[:, 8 ] x_test = test_data[:, :8 ] y_test = test_data[:, 8 ] scaler = StandardScaler() scaler.fit(x_train) x_train = scaler.transform(x_train) scaler.fit(x_test) x_test = scaler.transform(x_test) pred_test = my_adaboost(weak_clf, x_train, x_test, y_train, y_test, epoch) acc.append(accuracy_score(y_test, pred_test)) pre.append(precision_score(y_test, pred_test)) rec.append(recall_score(y_test, pred_test)) f1.append(f1_score(y_test, pred_test)) print ("My Adaboost outcome in test set with {} epoch:" .format (epoch)) print ("ACC:" , sum (acc) / 10 ) print ("PRE: " , sum (pre) / 10 ) print ("REC: " , sum (rec) / 10 ) print ("F1: " , sum (f1) / 10 )

整体的代码如下:

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 def my_adaboost (Weak_clf, X_train, X_test, Y_train, Y_test, Epoch ): """ :param Weak_clf: :param X_train: :param X_test: :param Y_train: :param Y_test: :param Epoch: :return: """ n_train, n_test = len (X_train), len (X_test) W = np.ones(n_train) / n_train pred_train, pred_test = [np.zeros(n_train), np.zeros(n_test)] for i in range (Epoch): Weak_clf.fit(X_train, Y_train, sample_weight=W) pred_train_i = weak_clf.predict(X_train) pred_test_i = weak_clf.predict(X_test) miss = [int (x) for x in (pred_train_i != Y_train)] miss_w = np.dot(W, miss) alpha = 0.5 * np.log(float (1 - miss_w) / float (miss_w + 0.01 )) factor = [x if x == 1 else -1 for x in miss] W = np.multiply(W, np.exp([float (x) * alpha for x in factor])) W = W / sum (W) pred_train_i = [1 if x == 1 else -1 for x in pred_train_i] pred_test_i = [1 if x == 1 else -1 for x in pred_test_i] pred_test = pred_test + np.multiply(alpha, pred_test_i) pred_train = pred_train + np.multiply(alpha, pred_train_i) pred_train = (pred_train > 0 ) * 1 pred_test = (pred_test > 0 ) * 1 return pred_test